Hornet Tracking AI Mode

The Hornet Detection and Tracking project automates the identification and tracking of hornets in video footage. This project supports ecological monitoring and pest control by streamlining the detection process with computer vision.

Project Overview

This project was developed to address the critical threat that predatory hornets pose to honeybee populations. By attacking beehives, hornets disrupt pollination, reduce honey production, and can decimate entire colonies. The goal was to build a computer vision application capable of detecting hornets near beehives and tracking their movement. The core idea is based on the observation that hornets often fly in a straight line back to their nest after an attack. By tracking this trajectory, the model could potentially help locate and neutralize the hornet nest, offering a proactive solution to protect the bees.
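The straight-line observation can be turned into a direction estimate. The following is a minimal sketch, not the project's implementation: it assumes the tracker yields one (x, y) bounding-box centroid per frame for a single hornet, and the function name `flight_bearing` is hypothetical. It fits a line through the centroids using the principal axis of their covariance and orients that line along the direction of travel.

```python
import math


def flight_bearing(centroids):
    """Estimate the dominant direction of travel from (x, y) centroids.

    Returns an angle in degrees measured from the +x axis of the image.
    In image coordinates y usually grows downward, so positive angles
    point toward the bottom of the frame.
    """
    n = len(centroids)
    if n < 2:
        raise ValueError("need at least two centroids")

    mean_x = sum(x for x, _ in centroids) / n
    mean_y = sum(y for _, y in centroids) / n

    # Entries of the (unnormalised) 2x2 covariance matrix.
    sxx = sum((x - mean_x) ** 2 for x, _ in centroids)
    syy = sum((y - mean_y) ** 2 for _, y in centroids)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in centroids)

    # Angle of the principal axis, i.e. the best-fit line through the points.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    dx, dy = math.cos(theta), math.sin(theta)

    # A line has two directions; pick the one that matches first -> last point.
    travel_x = centroids[-1][0] - centroids[0][0]
    travel_y = centroids[-1][1] - centroids[0][1]
    if dx * travel_x + dy * travel_y < 0:
        dx, dy = -dx, -dy

    return math.degrees(math.atan2(dy, dx))
```

The result is a direction in the image plane only. Converting it to a real-world bearing toward a nest would require camera position and orientation, which the project description does not cover.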

Final Report with Colab Outputs

Methodology and Process

The project was executed in four main phases: data collection, preprocessing, model development, and evaluation.

Data Collection & Preprocessing

High-quality data is the foundation of any effective AI model.

- Data Sourcing: A custom Python scraper using the Beautiful Soup library was built to gather thousands of images of bees and hornets from various online sources, including stock photo sites and videos.

- Annotation & Curation: The Roboflow platform was used to manage, clean, and annotate the image data. The process began with a small test set of 60 images and was incrementally expanded. The final curated dataset included over 5,500 bee images and approximately 2,300 hornet images, creating a robust two-class detection dataset.

- Challenges: A key challenge was the initial scarcity of hornet images and the model's occasional confusion between the two insects. This was addressed by continually sourcing and labeling more data to improve distinction.
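To illustrate the data-sourcing step, here is a small sketch of collecting image URLs from a page's HTML. The project used Beautiful Soup; to stay dependency-free this example swaps in Python's built-in `html.parser`, so it is a stand-in rather than the original scraper. The names `ImageLinkParser` and `extract_image_urls` and the example URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageLinkParser(HTMLParser):
    """Collects the src attribute of every <img> tag it encounters."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative paths against the page URL.
            self.image_urls.append(urljoin(self.base_url, src))


def extract_image_urls(html, base_url):
    """Return absolute image URLs found in html, de-duplicated in order."""
    parser = ImageLinkParser(base_url)
    parser.feed(html)
    return list(dict.fromkeys(parser.image_urls))
```

A real scraper would also need to fetch pages, respect each site's terms of use and robots.txt, and filter out icons and thumbnails before annotation.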

Model Development & Training

To find the best solution, we trained and compared two object detection architectures. Both were trained on Google Colab to take advantage of its GPU runtime. The final dataset was split into:

- Training Set: 1,510 images

- Validation Set: 431 images
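The counts above work out to roughly a 78/22 train/validation split (431 of 1,941 images held out). The source does not say how the split was produced, so the following is only a sketch of one reproducible way to do it; `split_dataset`, its parameters, and the file names in the usage note are assumptions.

```python
import random


def split_dataset(items, val_fraction=0.222, seed=0):
    """Shuffle items with a fixed seed and return (train, val) lists."""
    items = list(items)
    rng = random.Random(seed)  # local RNG so the global state is untouched
    rng.shuffle(items)
    n_val = round(len(items) * val_fraction)
    return items[n_val:], items[:n_val]
```

For example, `split_dataset([f"img_{i}.jpg" for i in range(1941)])` yields 1,510 training and 431 validation items, matching the sizes listed above. Fixing the seed keeps the split stable between training runs, which makes model comparisons fairer.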

We experimented with the following models:

- YOLOv8: A fast and efficient model known for its balance of speed and accuracy.

- YOLOv7: A slightly older but still powerful model, used as a performance benchmark.

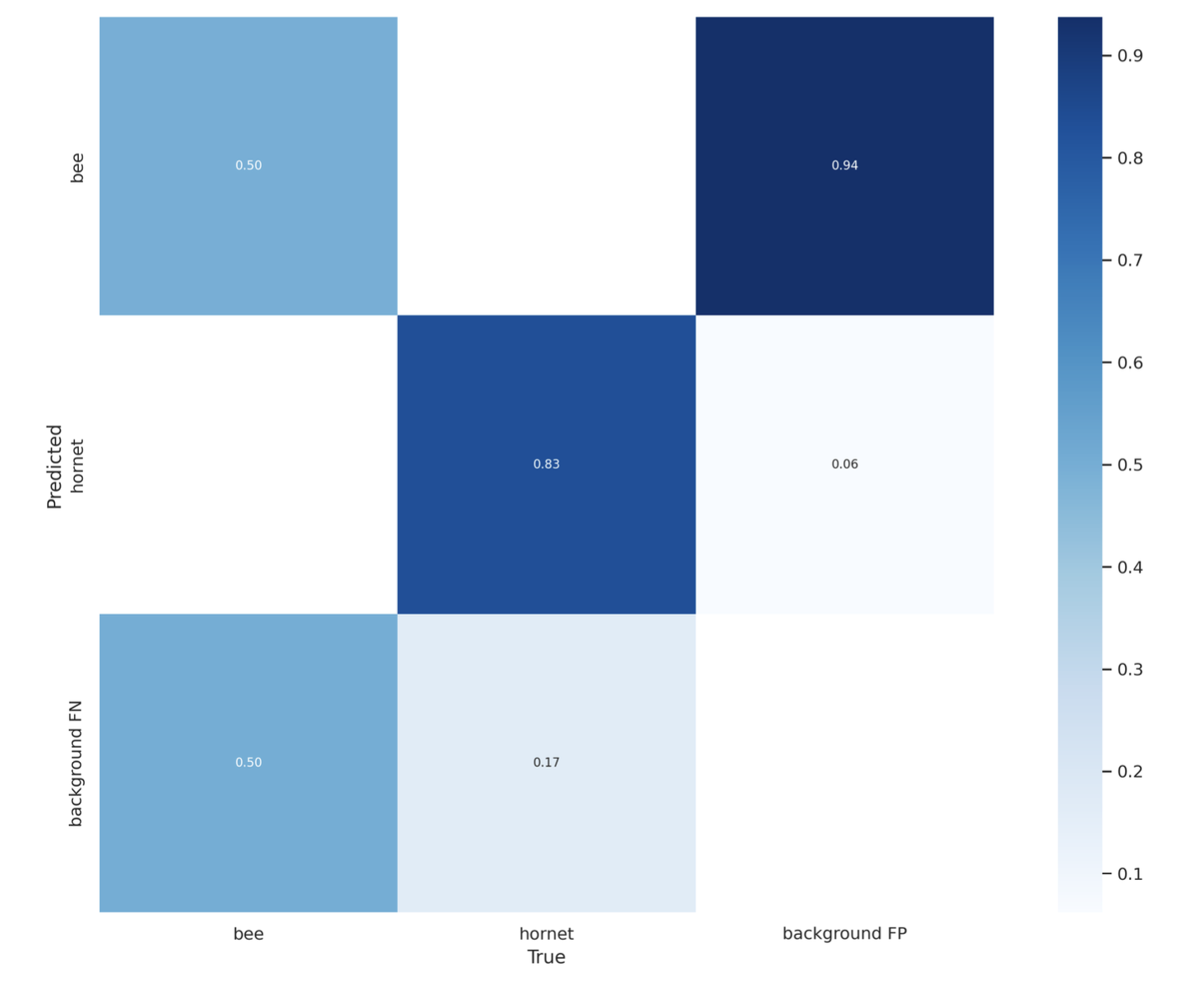

Evaluation and Results

Both models successfully learned to detect and differentiate between bees and hornets in images and video streams. The YOLOv8 model achieved a mean Average Precision (mAP@50-95) of 0.586 across both classes.

- Hornet Detection: The model performed best on hornets, with an mAP of 0.67.

- Bee Detection: The model achieved an mAP of 0.47 for bees.
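For context on the metric: mAP@50-95 averages average precision over Intersection over Union (IoU) thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only the IoU building block and the threshold sweep, not the full precision-recall computation that a YOLO evaluation performs. The function names and the (x1, y1, x2, y2) box format are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds used by the 50-95 variant of mAP.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def hits_per_threshold(predicted_box, true_box):
    """For each threshold, report whether the prediction counts as a match."""
    overlap = iou(predicted_box, true_box)
    return [overlap >= t for t in IOU_THRESHOLDS]
```

A prediction that overlaps its ground-truth box loosely counts as a match at 0.50 but not at 0.90, which is why mAP@50-95 is a stricter score than mAP@50.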

The model also ran fast enough for live deployment, with per-frame processing times as low as 11 to 20 ms. It identified and tracked insects in test videos, drawing bounding boxes around them frame by frame.
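As a quick check on the real-time claim, per-frame latency converts to throughput as 1000 divided by the latency in milliseconds. The helper name `frames_per_second` is illustrative. The source does not state whether the reported times include video decoding or drawing, so the result is best read as the frame rate achievable at most.

```python
def frames_per_second(latency_ms):
    """Convert a per-frame processing time in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


fastest = frames_per_second(11)  # about 90.9 frames per second
slowest = frames_per_second(20)  # 50.0 frames per second
```

Both figures are above the 25 to 30 frames per second of typical video, which is consistent with the suitability for live use described above.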

Outcomes and Future Work

This project resulted in a functional AI model capable of detecting and tracking hornets to protect beehives.

Key Achievements:

- Successfully built and trained a model to detect both bees and hornets in real-time video.

- Developed a complete data pipeline, from scraping and annotation to model training and evaluation.

- Gained significant hands-on experience with industry-standard tools like Roboflow, YOLO, and Google Colab.

Future Improvements:

While the core objectives were met, there are several areas for future enhancement:

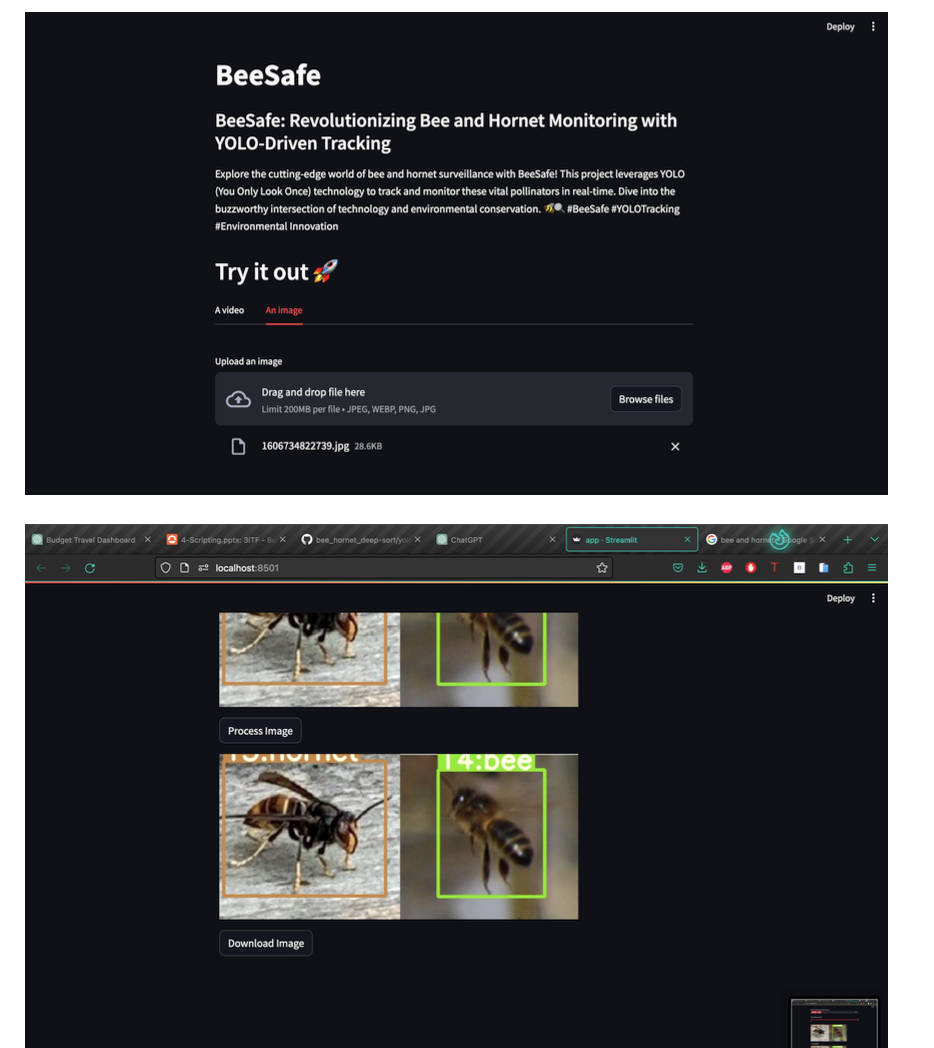

- Model Deployment: The final step is to deploy the model into a user-friendly Streamlit application. This would allow users to upload their own videos or use a live webcam feed for analysis.

- Dataset Refinement: Further improve model accuracy by adding more hornet images and balancing the dataset to better represent insects at various sizes and distances.

Personal Reflection & Key Learnings

This project was a comprehensive, hands-on journey through the entire machine learning lifecycle, from ideation to a functional prototype.

- Data Is Paramount: My single biggest takeaway is that the quality and quantity of data directly govern a model's performance. The extensive effort spent scraping, cleaning, and meticulously labeling images was not just a preliminary step; it was the most critical factor in the model's success.

- The Power of Iteration: We didn't get it right on the first try. The process of starting with a small model, identifying its weaknesses (like confusing bees with hornets), and iteratively improving it by adding more targeted data taught me a valuable lesson in practical problem-solving.

- Bridging Theory and Practice: It was incredibly rewarding to apply theoretical knowledge of computer vision to a real-world problem. Managing dependencies, debugging training scripts in Google Colab, and interpreting performance metrics like mAP provided practical experience that goes far beyond textbook learning.

- Understanding the Full Scope: While we successfully built the model, the challenges with deployment highlighted that a project isn't "done" after training. It reinforced the importance of considering the entire pipeline, including resource management and deployment, from the very beginning.